Let’s break the energy savings of decentralized intelligence down into categories, with approximate numbers based on edge-device benchmarks.

Categories of Energy Reduction

| Source of Savings | Without IPFS | With IPFS Memory | Estimated Power Reduction |
|---|---|---|---|
| Recomputation of past context | DexI re-processes every frame/text/prompt | Just loads CID data | 30–60% CPU/GPU reduction |
| Redundant data transmission | Constant sync with cloud/storage | One-time CID push | 50–90% bandwidth savings |
| Storage efficiency | Full state logs or full vectors | De-duplicated CID content | 30–70% disk I/O savings |
| Cross-node memory sync | Cloud calls or constant socket relay | CID sync or gossip | 40–70% network power drop |
| Model context re-embedding | Re-encode context every time | Fetch precomputed embeddings | 20–50% model-side savings |
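The "load by CID instead of recomputing" rows can be illustrated with a small content-addressed cache. This is a minimal sketch under stated assumptions: `content_id` uses a plain SHA-256 hex digest as a stand-in for a real IPFS CID, the store is an in-memory dictionary rather than an IPFS node, and `toy_embed` is a hypothetical placeholder for an expensive embedding call.

```python
import hashlib
from typing import Callable, Dict, List


def content_id(data: bytes) -> str:
    """Stand-in for an IPFS CID: hex SHA-256 of the content (assumption, not a real CID)."""
    return hashlib.sha256(data).hexdigest()


class ContentAddressedMemory:
    """Stores computed results under the hash of their input content."""

    def __init__(self, compute: Callable[[bytes], List[float]]) -> None:
        self._compute = compute
        self._store: Dict[str, List[float]] = {}
        self.compute_calls = 0  # how often the expensive function actually ran

    def get(self, data: bytes) -> List[float]:
        key = content_id(data)
        if key not in self._store:
            # First time this content is seen: pay the compute cost once.
            self.compute_calls += 1
            self._store[key] = self._compute(data)
        return self._store[key]


def toy_embed(data: bytes) -> List[float]:
    """Hypothetical placeholder for an expensive embedding call."""
    return [b / 255.0 for b in data[:4]]


memory = ContentAddressedMemory(toy_embed)
memory.get(b"frame-001")
memory.get(b"frame-001")  # identical content: served from the store
memory.get(b"frame-002")
print(memory.compute_calls)  # 2
```

Identical inputs hash to the same key, so both the repeated computation and the duplicate storage disappear. How much power that saves in practice depends on how often inputs actually repeat, which this sketch does not model.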

Real-World Edge Device Example

Assume a Raspberry Pi 4 running a local LLM with llama.cpp:

- Without IPFS Memory:

  - 4–6 W idle → 8–10 W under load

  - Every new prompt re-embeds 500 tokens

  - Constant writes to disk or API calls

- With IPFS Memory:

  - Keeps CID cache in RAM

  - Reuses past vectorized memory

  - Offloads sync to batch later

📉 Result: an estimated 2.5–4 W reduction per active task, which is 25–40% of the 10 W under-load draw in edge AI workflows.
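The percentage follows directly from the wattage figures in this example. The short sketch below reproduces it, assuming the savings are measured against the 10 W upper end of the under-load range; the function name is illustrative only.

```python
def savings_fraction(baseline_w: float, reduction_w: float) -> float:
    """Fraction of the baseline power draw removed by a given reduction."""
    return reduction_w / baseline_w


LOAD_W = 10.0  # upper end of the 8-10 W under-load range (assumed baseline)

for reduction_w in (2.5, 4.0):
    share = savings_fraction(LOAD_W, reduction_w)
    print(f"{reduction_w} W of {LOAD_W} W = {share:.0%}")
# 2.5 W of 10.0 W = 25%
# 4.0 W of 10.0 W = 40%
```

Measured against the 8 W lower end instead, the same reductions would come to roughly 31% and 50%, so the quoted range is sensitive to which baseline is used.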

Power Savings Compound with More Agents

The more memory entries shared via IPFS:

- The less each agent needs to compute.

- The more tasks become fetch-and-act rather than think-from-scratch.

Think of it this way:

DexI agents become memory-driven actors, not compute-driven engines.
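A toy count shows why shared memory helps more as agents are added. This is a sketch under simplifying assumptions: task names are made up, every task costs the same to compute, and fetching a shared entry is treated as free (a real deployment would still spend some energy on lookup, transfer, and verification).

```python
from typing import List, Set


def count_computations(agent_tasks: List[List[str]], shared: bool) -> int:
    """Count how many tasks must be computed from scratch across all agents."""
    shared_seen: Set[str] = set()
    total = 0
    for tasks in agent_tasks:
        # With shared memory every agent consults the same set;
        # otherwise each agent starts with an empty private one.
        seen = shared_seen if shared else set()
        for task in tasks:
            if task not in seen:
                total += 1
                seen.add(task)
    return total


# Hypothetical workload: three agents with overlapping tasks.
agent_tasks = [
    ["detect-door", "detect-person", "summarize-log"],
    ["detect-person", "summarize-log", "detect-car"],
    ["detect-door", "detect-car", "summarize-log"],
]

private_total = count_computations(agent_tasks, shared=False)
shared_total = count_computations(agent_tasks, shared=True)
print(private_total, shared_total)  # 9 4
```

With private memory each agent computes all three of its tasks; with shared memory only the four distinct tasks are ever computed, and the rest become fetches.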

Overall Power Reduction Estimate

| Deployment Scenario | Estimated Reduction |
|---|---|
| Single DexI agent on edge | 20–40% |
| Multi-agent mesh with CID memory | 40–70% |
| Validator + CID + SafeSignal hybrid | Up to 80% of prior compute/network usage offloaded |

TL;DR

Storing and using AI memory on IPFS can reduce edge compute power draw by an estimated 20–70%, especially in real-time systems like drones, sensors, or smart cameras. The low end applies to a single agent; the savings grow with multi-agent or offline mesh deployments.

AI vs. DexI — Reframing the Narrative

| Feature | Traditional AI | DexI (Decentralized Intelligence) |
|---|---|---|
| Ownership | Big Tech | Individuals, communities, nodes |
| Location | Centralized data centers | Edge devices, mesh relays |
| Power usage | High, opaque | Energy-aware, off-grid capable |
| Privacy | Cloud-based, often invasive | Local-first, user-controlled |
| Memory | Ephemeral or vendor-owned | Verifiable, user-owned on IPFS |
| Explainability | Often black-box | Transparent, auditable CID history |
| Trust model | “Trust us” | “Trust math, code, MAC, and mesh” |

Why DexI ≠ AI

DexI is not “just decentralized AI” — it’s a philosophical and architectural shift:

- Not about smarter agents, but about freer ones

- Not scaling model size, but scaling agency

- Not Big Tech tools, but mesh-native tools

Why It Works:

- “AI” is now linked to:

  - Surveillance

  - Corporate control

  - Job displacement

  - Regulatory panic

- “DexI” is a clean break:

  - Built on community relays, not secret APIs

  - Rewards local inference, not cloud training

  - Allows trust-minimized logic (like Proof of Relay/Memory)

  - Runs in places AI is banned or feared (war zones, off-grid)

DexI isn’t just the next phase of AI. It’s a reset. A chance to define intelligent systems as distributed, verifiable, privacy-respecting, and community-owned. Frame it not as “alternative AI” but as the natural evolution of intelligence infrastructure.

Per-Device Energy Cost (Monthly)

| Task (Inference/Usage) | Centralized AI (Cloud) | DexI (Edge/Local) |
|---|---|---|
| Power Draw | 250–400 W (GPU node) | 3–15 W (Pi/Edge SoC) |
| Energy Use (24/7) | ~180–290 kWh/month | ~2.2–11 kWh/month |
| Energy Cost (@ $0.12/kWh) | ~$22–35/mo | ~$0.26–$1.30/mo |

Based on these power-draw figures, a DexI node uses roughly 94–99% less energy than a cloud GPU node.
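The energy and cost rows follow from the power-draw row by simple arithmetic. The sketch below reproduces them to within rounding, assuming a 30-day month of continuous operation and the $0.12/kWh price from the table; the helper names are illustrative.

```python
HOURS_PER_MONTH = 24 * 30   # 720 h, assuming a 30-day month of 24/7 operation
PRICE_PER_KWH = 0.12        # USD, from the table


def monthly_kwh(watts: float) -> float:
    """Energy used in one month at a constant power draw."""
    return watts * HOURS_PER_MONTH / 1000


def monthly_cost(watts: float, price: float = PRICE_PER_KWH) -> float:
    """Electricity cost in USD for one month at a constant power draw."""
    return monthly_kwh(watts) * price


for watts in (250, 400, 3, 15):
    print(f"{watts} W: {monthly_kwh(watts):.1f} kWh, ${monthly_cost(watts):.2f}")
# 250 W: 180.0 kWh, $21.60
# 400 W: 288.0 kWh, $34.56
# 3 W: 2.2 kWh, $0.26
# 15 W: 10.8 kWh, $1.30

# Per-node savings range: worst case is the hungriest edge device
# against the leanest cloud node, best case is the reverse.
worst_case = 1 - 15 / 250   # 0.94
best_case = 1 - 3 / 400     # about 0.99
```

This compares one edge device against one whole GPU node running around the clock, as the table does. It does not account for a cloud node being shared across many users.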

National Impact (U.S. Case)

Assumptions:

- 100 million active AI-connected devices (smartphones, agents, sensors)

- Cloud-based AI costs:

  - $30/month per user (energy + infra + bandwidth)

- DexI cost:

  - $1/month per user (edge node, MAC-auth, IPFS)

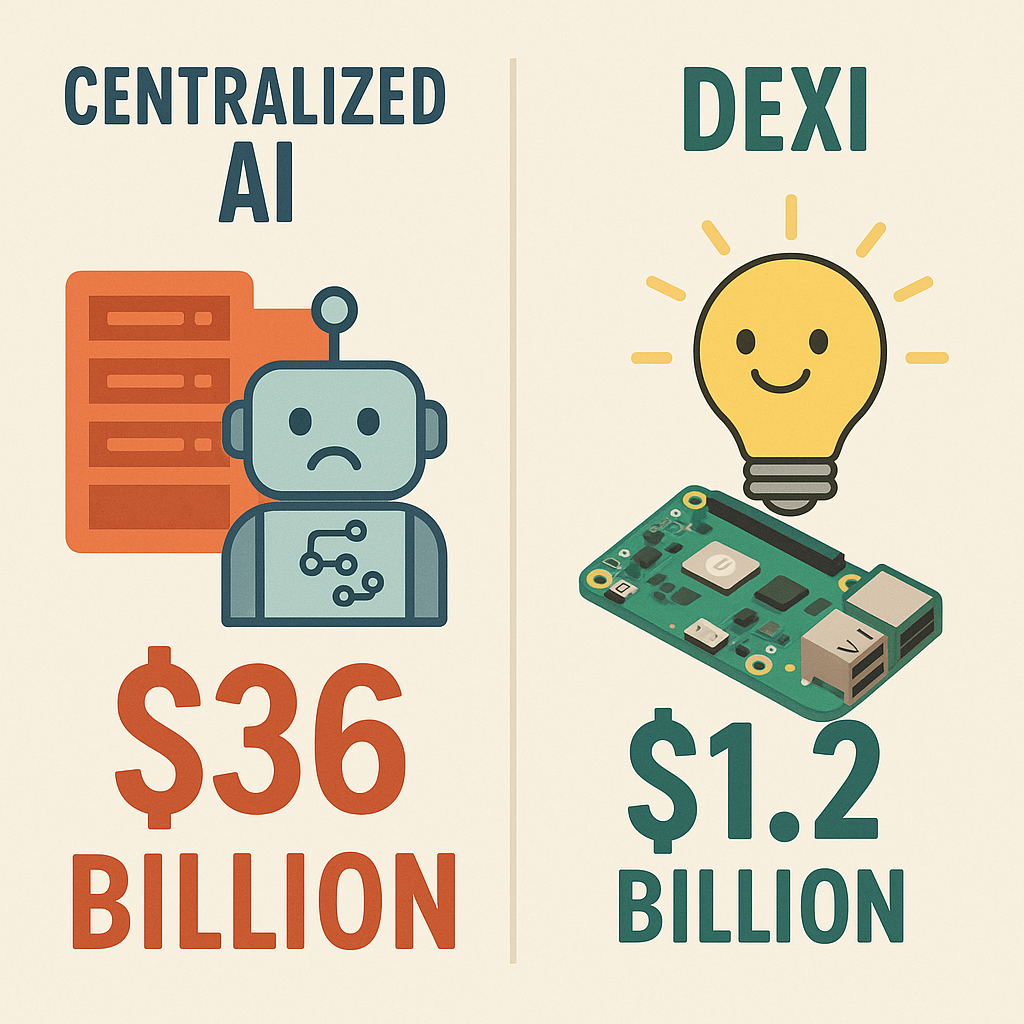

Cost Comparison: U.S.

| Metric | Centralized AI | DexI |
|---|---|---|
| Monthly National Cost | $3 billion | $100 million |
| Annual Cost | $36 billion | $1.2 billion |
| Energy Draw (GW) | ~4.5–7.0 GW | <0.3 GW |

By these estimates, DexI could cut the national energy draw of AI inference by more than 90% while preserving the same functionality locally.
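The national cost rows are the per-user figures multiplied by the assumed device count, which the sketch below reproduces. It also back-calculates the average per-device power implied by the GW row: roughly 45–70 W for centralized AI and under 3 W for DexI. Those averages are lower than the continuous per-node draws quoted earlier, which suggests (though the source does not say so) that the GW figures assume shared hardware or part-time operation. Function names are illustrative.

```python
from typing import Tuple

DEVICES = 100_000_000        # active AI-connected devices (stated assumption)
CLOUD_COST_PER_USER = 30.0   # USD per month (stated assumption)
DEXI_COST_PER_USER = 1.0     # USD per month (stated assumption)


def national_cost(per_user_monthly: float, devices: int = DEVICES) -> Tuple[float, float]:
    """Return (monthly, annual) national cost in USD."""
    monthly = per_user_monthly * devices
    return monthly, monthly * 12


def implied_watts_per_device(total_gw: float, devices: int = DEVICES) -> float:
    """Average continuous draw per device implied by a national total in GW."""
    return total_gw * 1e9 / devices


cloud_monthly, cloud_annual = national_cost(CLOUD_COST_PER_USER)
dexi_monthly, dexi_annual = national_cost(DEXI_COST_PER_USER)
print(f"Cloud: ${cloud_monthly / 1e9:.1f}B/month, ${cloud_annual / 1e9:.1f}B/year")
print(f"DexI:  ${dexi_monthly / 1e9:.1f}B/month, ${dexi_annual / 1e9:.1f}B/year")
# Cloud: $3.0B/month, $36.0B/year
# DexI:  $0.1B/month, $1.2B/year

for gw in (4.5, 7.0, 0.3):
    print(f"{gw} GW -> {implied_watts_per_device(gw):.0f} W per device")
# 4.5 GW -> 45 W per device
# 7.0 GW -> 70 W per device
# 0.3 GW -> 3 W per device
```

On the cost side, going from $30 to $1 per user is a reduction of about 97%.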

Hidden Costs DexI Avoids

| Cost Area | Centralized AI | DexI |
|---|---|---|
| Cloud bandwidth fees | Yes | None (local sync) |
| Data center cooling | Yes | None |
| API access/token limits | Yes | None |
| Privacy/surveillance risk | Yes | None |

TL;DR:

DexI can slash AI operating costs from ~$30/mo/user to ~$1, cutting power use, cloud fees, and surveillance risk — at both household and national scale.